Meta has recently released Code Llama 70B, the latest update to the company’s open-source artificial intelligence (AI) coding model. Announcing the release, the California-based tech conglomerate called it “the largest and best-performing model in the Code Llama family.” As per the company’s report, Code Llama 70B scored 53 percent in accuracy on the HumanEval benchmark, highlighting capabilities nearing OpenAI’s GPT 4 that scored 67 percent. The latest AI assistant joins the company’s existing coding models Code Llama 7B, Code Llama 13B, and Code Llama 34B.

Meta CEO Mark Zuckerberg announced Code Llama 70B via a Facebook post and said, “We’re open sourcing a new and improved Code Llama, including a larger 70B parameter model. Writing and editing code has emerged as one of the most important uses of AI models today. [..] I’m proud of the progress here, and looking forward to including these advances in Llama 3 and future models as well.”

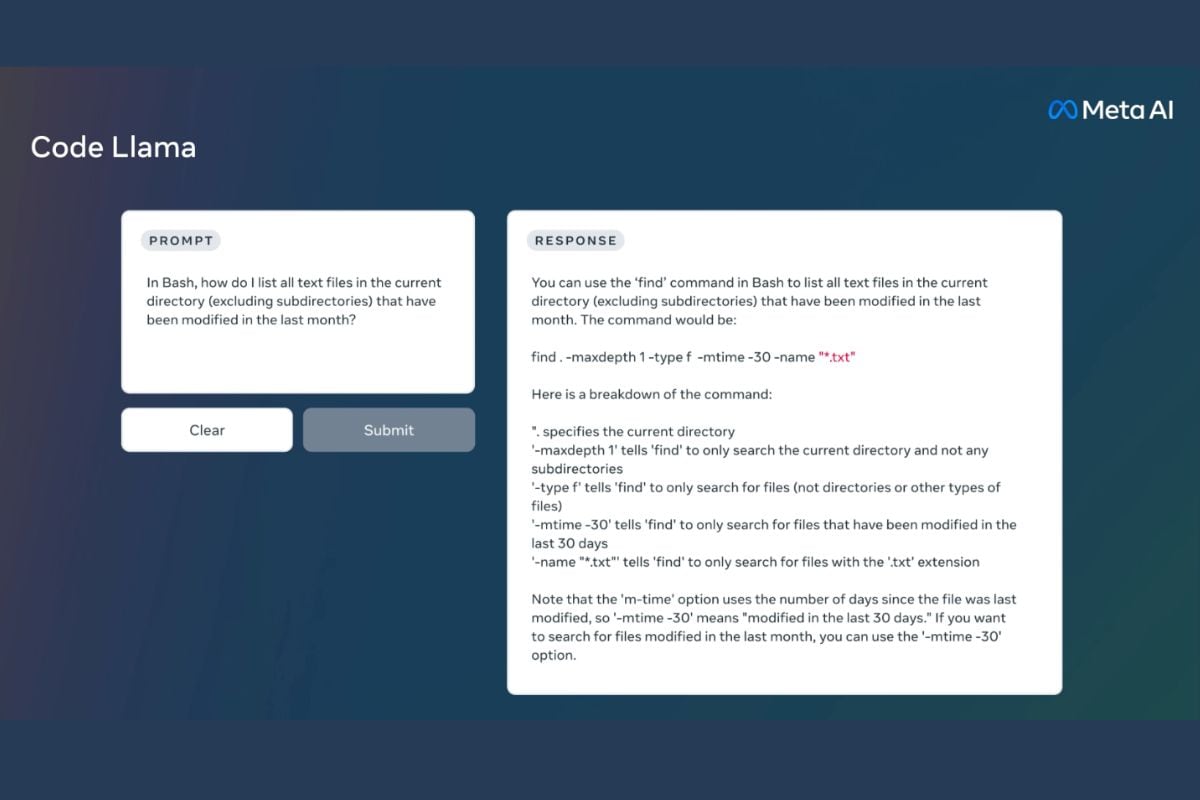

Code Llama 70B is available in three versions — the foundational model, the Code LLama – Python, and Code Llama – Instruct, as per Meta’s blog post. Python is for the specific programming language and Instruct has natural language processing (LNP) capabilities, which means you can use this even if you do not know how to code.

The Meta AI coding assistant is capable of generating both codes and natural language responses, the latter being important for explaining codes and answering queries related to them. The 70B model has been trained on 1 trillion tokens (roughly 750 million words) of coding and code-related data. Like all LLama AI models, Code Llama 70B is also free for research and commercial purposes. It is hosted on Hugging Face, a coding repository.

Coming to benchmarks, the company has posted its scores in accuracy and compared them against all rival coding-focussed AI models. On the HumanEval benchmark, 70B scored 53 percent and on the Mostly Basic Python Programming (MBPP) benchmark, it received 62.4 percent. In both benchmarks, it has outscored OpenAI’s GPT 3.5 which received 48.1 percent and 52.2 percent, respectively. GPT 4 posted only its HumanEval accuracy scores online and netted 67 percent, edging the Llama Code 70B parameter by a fairly healthy margin.

In August 2023, Meta released Code Llama, which was based on the Llama 2 foundational model and trained specifically on its coding-based datasets. It accepts both codes and natural language for prompts and can generate responses in both, as well. Code Llama can generate, edit, analyse, and debug codes. Its Instruct version can also help users understand the codes in natural language.